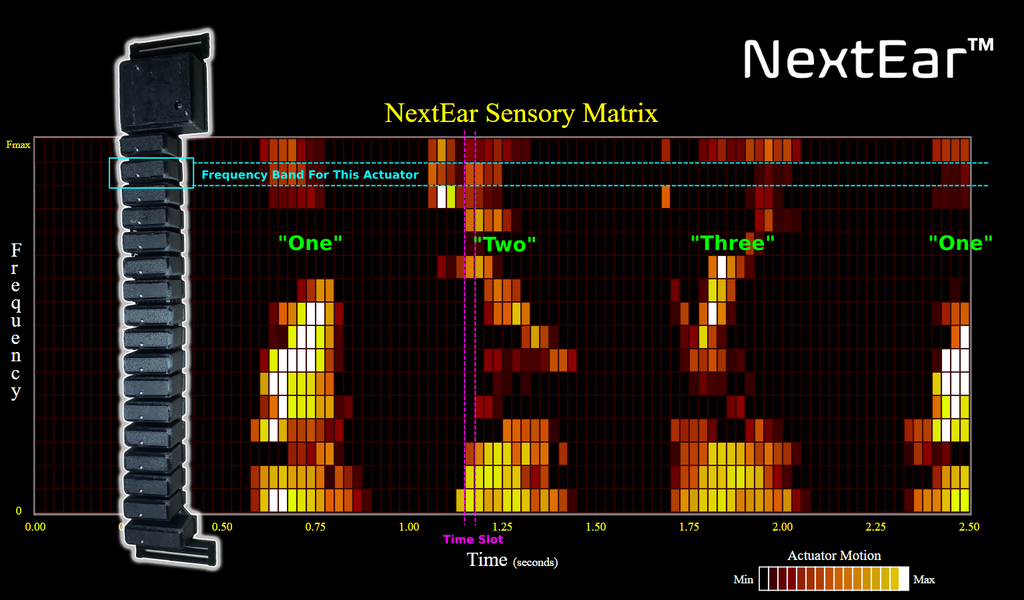

Example analysis showing how the sensory actuators on the NextEar haptic belt change over time and frequency

This graph shows how the NextEar haptic belt updates its 16 sensory actuators based on incoming sound. While NextEar is receiving sound, the frequency-based actuators on the belt are updated at each time slot in real time, with very little latency. NextEar uses Digital Signal Processing (DSP) to update its actuators quickly as sound arrives, producing distinct patterns that are transmitted to the user. The user feels the actuators change as the incoming sound is processed continuously.
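To make the idea of frequency-based actuators concrete, here is a minimal Python sketch of one way a single time slot of audio could be mapped to 16 intensities. It is an illustration only, not NextEar's actual DSP: the FFT band-energy approach, the function name `actuator_levels`, the 16 kHz sample rate, the 100 Hz to 8 kHz range, and the logarithmic band spacing are all assumptions chosen for the example.

```python
import numpy as np

NUM_ACTUATORS = 16  # the belt has 16 actuators (from the text above)


def actuator_levels(frame, sample_rate=16000, f_min=100.0, f_max=8000.0):
    """Map one audio frame (one time slot) to 16 intensities in [0, 1].

    Hypothetical helper: the sample rate, frequency range, and log-spaced
    bands are assumptions for illustration, not NextEar's real parameters.
    """
    frame = np.asarray(frame, dtype=float)

    # Window the frame to reduce spectral leakage, then take the magnitude spectrum.
    windowed = frame * np.hanning(len(frame))
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)

    # Split the frequency range into 16 logarithmically spaced bands,
    # one band per actuator.
    edges = np.geomspace(f_min, f_max, NUM_ACTUATORS + 1)

    energy = np.zeros(NUM_ACTUATORS)
    for i in range(NUM_ACTUATORS):
        in_band = (freqs >= edges[i]) & (freqs < edges[i + 1])
        if in_band.any():
            energy[i] = spectrum[in_band].mean()

    # Normalize so the strongest band drives its actuator at full intensity.
    peak = energy.max()
    return energy / peak if peak > 0 else energy


if __name__ == "__main__":
    # One 512-sample frame (32 ms at 16 kHz) containing a 1 kHz test tone.
    t = np.arange(512) / 16000
    levels = actuator_levels(np.sin(2 * np.pi * 1000 * t))
    print(np.round(levels, 2))
```

Calling `actuator_levels` on each successive frame gives one column of a time-versus-actuator picture like the graph above. In this sketch, the frame length sets the trade-off between update latency and frequency resolution.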

The example graph above was produced onboard NextEar while it was operating, with speech as the input sound (specifically, the spoken digits 'one', 'two', 'three', and 'one'). Additional examples are shown below.